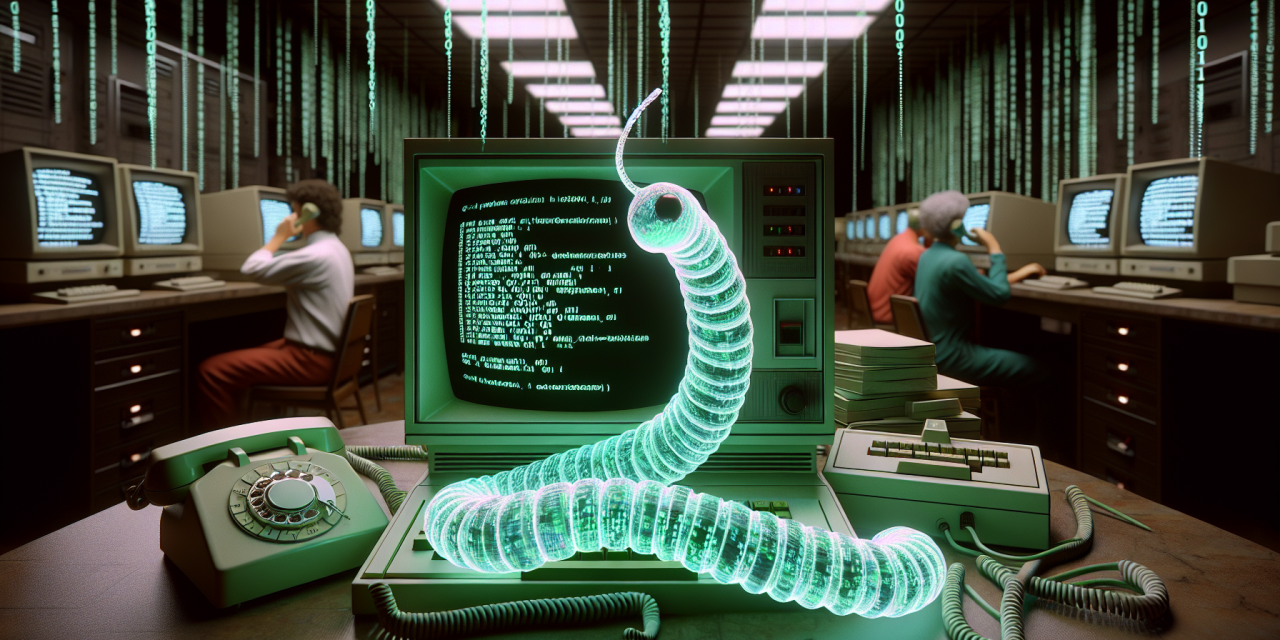

Picture this: It’s November 2, 1988, and you’re sitting at your computer terminal, watching green text crawl across your monochrome screen. The early internet, a patchwork of networks that still included the original ARPANET, connects universities and research centers in a quiet digital web. Suddenly, your computer starts running strangely slowly. Processes are multiplying. Memory is filling up. And across the country, thousands of other computers are experiencing the exact same thing.

You’ve just witnessed the birth of the world’s first major internet catastrophe: the Morris Worm. But here’s the fascinating part—it wasn’t meant to be a disaster at all.

What Exactly Is a Computer Worm?

Before we dive into this digital drama, let’s clear up what we mean by a “worm.” Think of it like a biological parasite, but instead of living in your stomach, it lives in computer networks. Unlike a virus that needs you to accidentally run an infected file, a worm can spread all by itself, crawling from computer to computer through network connections.

Imagine if you had a magic piece of paper that could photocopy itself and then mail those copies to everyone in your address book—and then those copies would mail themselves to everyone in their address books, and so on. That’s essentially how a computer worm works, except instead of paper, it’s copying program code, and instead of the postal service, it’s using network connections.
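To make the photocopy analogy concrete, here is a toy model in Python. It treats the network as a hypothetical map from each host to the hosts it can reach, and assumes a copy moves one hop per round. The host names and the function `spread` are illustrative, not taken from any real worm. Under those assumptions the spread is simply a breadth-first traversal: each newly infected host passes the program to its neighbors in the next round.

```python
from collections import deque


def spread(network: dict[str, list[str]], patient_zero: str) -> dict[str, int]:
    """Toy model: return the round in which each reachable host first gets a copy.

    Assumes (hypothetically) that a copy reaches every neighbor one round
    after arriving, and that a host is never infected twice.
    """
    infected_at = {patient_zero: 0}
    queue = deque([patient_zero])
    while queue:
        host = queue.popleft()
        for neighbor in network.get(host, []):
            if neighbor not in infected_at:  # skip hosts that already have a copy
                infected_at[neighbor] = infected_at[host] + 1
                queue.append(neighbor)
    return infected_at


# A tiny made-up network: each host lists the hosts it talks to.
toy_network = {
    "alpha": ["bravo", "charlie"],
    "bravo": ["delta"],
    "charlie": ["delta", "echo"],
}
print(spread(toy_network, "alpha"))
# {'alpha': 0, 'bravo': 1, 'charlie': 1, 'delta': 2, 'echo': 2}
```

Note the `if neighbor not in infected_at` check: this model never infects the same host twice. As the rest of this story shows, the real Morris Worm deliberately broke that assumption.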

The Morris Worm was special because it was the first one to prove just how interconnected and vulnerable our digital world had become.

Meet the Accidental Internet Vandal

Robert Tappan Morris wasn’t your typical troublemaker. He was a 23-year-old graduate student at Cornell University, the son of a cryptographer who worked at Bell Labs, and by all accounts, a brilliant programmer. Morris didn’t set out to bring the internet to its knees—he just wanted to explore.

His goal was simple: create a program that could travel across the network and count how many computers were connected to the early internet. It was meant to be a harmless census-taker, quietly visiting computers, making a note, and moving on. Think of it like a digital door-to-door surveyor with a clipboard.

But Morris made a crucial programming mistake—one that turned his innocent explorer into a digital disaster.

When Good Code Goes Bad

Here’s where the story gets really interesting from a coding perspective. Morris built his worm to be clever about not infecting the same computer twice. When it arrived at a new machine, it would first check: “Has someone like me been here before?” If the answer was yes, it would usually move on to avoid detection.

But Morris worried that system administrators might figure out his worm and create fake “I’ve been infected” signals to block it. So he programmed in a special rule: even if a computer claimed to already be infected, the worm would ignore that claim and infect it anyway—but only one time out of every seven attempts.

This is like a door-to-door surveyor who, even when you say “I’ve already been surveyed,” replies, “Well, maybe you’re lying,” and forces their way in anyway about 14% of the time (one visit in seven). On paper, that sounded like a modest safeguard. On a real network, where the same machine could be contacted again and again by copies arriving from many neighbors, it was catastrophic.
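The rule can be sketched in a few lines of Python. This is a hypothetical reconstruction of the logic as described above, not Morris’s actual code (the real worm was written in C); the function name `should_install`, the constant `REINFECT_ODDS`, and the use of Python’s `random` module are assumptions for illustration.

```python
import random

# Hypothetical constant: distrust an "already infected" answer 1 time in 7.
REINFECT_ODDS = 7


def should_install(host_reports_infected: bool, rng: random.Random) -> bool:
    """Decide whether a newly arrived copy should stay on a host."""
    if not host_reports_infected:
        return True  # no existing copy reported: always install
    # The host says it is already infected. Ignore that claim about 1 time in 7,
    # so a faked "I'm infected" signal can't fully block the program.
    return rng.randrange(REINFECT_ODDS) == 0


rng = random.Random(1988)  # fixed seed so the run is repeatable
trials = 70_000
forced = sum(should_install(True, rng) for _ in range(trials))
print(forced / trials)  # close to 1/7, roughly 0.14
```

The design choice is the whole problem: a fixed probability of overriding the check means every repeated contact with the same machine is another chance to add a copy, so the more often a host is contacted, the more copies it accumulates.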

The Digital Domino Effect

When Morris released his worm on November 2, 1988, it spread far faster than he’d anticipated. Remember, this was an era when computer security was based mostly on trust and obscurity. Passwords were often simple, network protocols had built-in vulnerabilities, and the internet was small enough that most administrators knew each other personally.

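The worm used several clever tricks to spread itself, targeting VAX and Sun-3 computers running versions of Berkeley Unix. It abused a debug mode in the “sendmail” program (which handled email), guessed common passwords, and exploited a buffer overflow in the “finger” service (which let you check whether users were logged in). It also took advantage of trust relationships between machines, which let it log in to some hosts without needing a password at all. These weren’t particularly sophisticated attacks by today’s standards, but they were devastatingly effective for their time.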
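Password guessing is the easiest of these tricks to illustrate from the defender’s side. Published analyses of the worm report that it first tried guesses derived from the account itself (such as no password, the account name, the name doubled, and the name reversed) before moving on to a built-in word list. The Python sketch below checks a password against guesses of that kind. The function names are hypothetical, and `SMALL_WORDLIST` is a tiny made-up stand-in, not the worm’s actual dictionary.

```python
# Hypothetical stand-in for a dictionary of common passwords.
SMALL_WORDLIST = frozenset({"password", "secret", "guest"})


def account_name_guesses(username: str) -> set[str]:
    """Guesses built only from the account name, in the style attributed to the worm."""
    return {
        "",                   # no password at all
        username,             # the account name itself
        username + username,  # the name doubled
        username[::-1],       # the name reversed
    }


def is_easily_guessed(username: str, password: str,
                      wordlist: frozenset[str] = SMALL_WORDLIST) -> bool:
    """Return True if the password falls to these simple guesses."""
    return password in account_name_guesses(username) or password in wordlist


print(is_easily_guessed("alice", "ecila"))     # True: reversed account name
print(is_easily_guessed("alice", "t7#Qv!9z"))  # False
```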

Within hours, the worm had infected, by a commonly cited estimate, about 10% of all internet-connected computers: roughly 6,000 of the approximately 60,000 machines then online. But here’s the kicker: because of that “one-in-seven” reinfection rule, many computers were infected multiple times. Each new copy consumed memory and processing power, causing systems to slow to a crawl or crash entirely.
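A back-of-the-envelope model shows why repeated contact mattered so much. Assume, as a simplification, that the first copy to reach a host always installs, that every later arrival stays with probability 1/7, and that installed copies keep running. Then after n arrivals a host carries, on average, 1 + (n - 1)/7 copies. The sketch below computes that expectation exactly with `fractions.Fraction` and checks it against a seeded simulation; the function names and the figure of 50 arrivals are hypothetical.

```python
import random
from fractions import Fraction

P_STAY = Fraction(1, 7)  # simplified: chance a repeat arrival keeps running


def expected_copies(arrivals: int) -> Fraction:
    """Average number of running copies after `arrivals` contacts with one host."""
    if arrivals <= 0:
        return Fraction(0)
    # First arrival always installs; each later one adds a copy with prob 1/7.
    return 1 + (arrivals - 1) * P_STAY


def simulate_copies(arrivals: int, rng: random.Random) -> int:
    """One random run of the same simplified model."""
    copies = 0
    for _ in range(arrivals):
        if copies == 0 or rng.randrange(7) == 0:
            copies += 1
    return copies


rng = random.Random(42)
runs = [simulate_copies(50, rng) for _ in range(2_000)]
print(expected_copies(50))    # 8
print(sum(runs) / len(runs))  # close to 8
```

Under these assumptions, 50 contacts would leave about eight copies competing for memory and CPU time on a single machine, which is the kind of pile-up that dragged infected systems down.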

Pandemonium in the Pre-Web World

Imagine if suddenly every telephone in your city started making random calls to other phones, which then started making more random calls, until the entire phone system jammed with overlapping conversations. That’s essentially what happened to the early internet.

System administrators found themselves in a digital nightmare. They couldn’t communicate with each other because the networks were clogged. They couldn’t easily share solutions because email systems were down. Some resorted to calling each other on actual telephones—remember those?—to coordinate their response.

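The economic impact was enormous. Many universities and research sites disconnected from the network entirely. Research projects ground to a halt. Estimates of the damage varied widely: the U.S. General Accounting Office put the cost at anywhere from $100,000 to $10 million in 1988 dollars, with some other estimates running higher. The top of that range alone is equivalent to more than $20 million today.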

But perhaps more significantly, the Morris Worm shattered the internet community’s sense of security and trust. The digital frontier suddenly felt a lot less friendly.

The Birth of Digital Security

Every crisis creates opportunity, and the Morris Worm was no exception. Within weeks, DARPA funded the creation of the Computer Emergency Response Team (CERT) at Carnegie Mellon University’s Software Engineering Institute, which became the first organization dedicated to coordinating responses to internet security incidents.

Before the worm, cybersecurity was largely an afterthought. System administrators were more worried about hardware failures than malicious code. The Morris Worm proved that in our interconnected world, one person’s programming mistake could become everyone’s emergency.

Think of it like food safety regulations. Before major outbreaks, restaurants pretty much policed themselves. But once people realized how quickly a problem could spread through the supply chain, we developed health inspectors, safety protocols, and rapid response systems. The Morris Worm served as a similar wake-up call for digital security.

Justice and Consequences

Morris became the first person convicted under the 1986 Computer Fraud and Abuse Act. He faced up to five years in prison and a $250,000 fine, but ultimately received three years of probation, 400 hours of community service, and a $10,050 fine. The relatively light sentence reflected the view that Morris had not intended to cause harm; he was essentially punished for reckless curiosity.

Interestingly, Morris’s career didn’t end with his conviction. In 1995 he co-founded a company called Viaweb with Paul Graham, which Yahoo bought in 1998 for about $49 million. After completing a PhD at Harvard, he became a professor at MIT, and in 2005 he co-founded Y Combinator, one of the most influential startup accelerators in Silicon Valley. Sometimes being the person who breaks something first makes you the best person to understand how to fix it.

Lessons That Echo Today

The Morris Worm taught us several crucial lessons that remain relevant in our smartphone-and-social-media world. First, small programming errors can have massive real-world consequences when they interact with complex systems. Second, security can’t be an afterthought in an interconnected world—it needs to be built in from the beginning.

Perhaps most importantly, the Morris Worm demonstrated that the power to cause widespread disruption was no longer limited to governments or large organizations. A single graduate student, working alone, could bring down a significant portion of the world’s digital infrastructure.

Today’s cybersecurity challenges make the Morris Worm look quaint by comparison. Modern malware can steal personal information, hold data for ransom, manipulate financial markets, or even interfere with power grids. But the fundamental lesson remains the same: in our digitally connected world, we’re only as secure as our weakest link.

The next time your computer asks you to install a security update, remember Robert Morris and his accidental internet catastrophe. That little notification represents decades of hard-learned lessons about what happens when curiosity meets poor security—and why we all have a stake in keeping our digital neighborhoods safe.